Data labeling is the foundation on which machine learning models are built: accurate, well-labeled data is critical for training effective, reliable models. This article explores strategies for optimizing the data labeling process, focusing on manual annotation, semi-supervised learning, and crowdsourcing. Each method has its own advantages and can be tailored to the needs and resources of a given AI project.

1. Manual Annotation: Precision at the Cost of Time

Manual annotation involves human annotators labeling data based on their judgment. This approach is often used when high accuracy is critical and the data is complex or sensitive.

Benefits:

- High Quality and Accuracy: Human annotators can grasp nuance, context, and ambiguity that automated methods often miss.

- Customization: Allows for specific, detailed instructions and criteria tailored to the project’s needs.

Drawbacks:

- Scalability: Time-consuming and costly, especially for large datasets.

- Consistency Issues: Variability in human judgment can lead to inconsistencies in data labeling.

Best Practices:

- Clear Guidelines: Develop comprehensive annotation guidelines to ensure consistency.

- Regular Training: Hold training sessions to calibrate understanding among annotators.

- Quality Checks: Implement periodic reviews to correct errors and refine the process.
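
Consistency is easier to manage when it is measured. One common metric for two annotators who label the same items is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The following is a minimal Python sketch, assuming two annotators and categorical labels; the function name and sample labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items where both annotators chose the same label.
    p_observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: derived from each annotator's own label frequencies.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    p_expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_expected == 1.0:
        # Both annotators used the same single label for every item; kappa is undefined.
        return float("nan")
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical labels from two annotators for the same six items.
annotator_a = ["cat", "cat", "dog", "dog", "cat", "dog"]
annotator_b = ["cat", "dog", "dog", "dog", "cat", "cat"]
print(round(cohens_kappa(annotator_a, annotator_b), 3))  # 0.333
```

A kappa near 1 indicates strong agreement, while a value near 0 means the annotators agree no more often than chance would predict. A low score during a periodic quality check is a signal that the guidelines or annotator training need revisiting.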

2. Semi-Supervised Learning: Balancing Automation and Accuracy

Semi-supervised learning uses a small amount of labeled data alongside a large amount of unlabeled data. This method is beneficial when labeling resources are limited.

Benefits:

- Efficiency: Reduces the need for labeled data, making the process faster and less resource-intensive.

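- Improved Learning: When the unlabeled data comes from the same distribution as the labeled set, it can improve accuracy over a purely supervised model trained on the few available labels, or over a purely unsupervised approach.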

Drawbacks:

- Dependency on Initial Labels: The quality of the initial labeled set strongly affects the model's performance, and labeling errors can be amplified as the model extends its predictions to unlabeled data.

- Complexity in Implementation: Requires sophisticated algorithms and tuning to get right.

Best Practices:

- Start with Quality: Ensure the initial labeled dataset is accurately and comprehensively labeled.

- Iterative Process: Use an iterative approach to refine labels and improve the model progressively.

- Suitable Algorithms: Use methods designed for semi-supervised learning, such as co-training or transductive support vector machines (TSVMs).
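
Co-training and TSVMs are too involved for a short example, but the iterative idea can be illustrated with self-training (pseudo-labeling), a simpler semi-supervised technique swapped in here for illustration. A model trained on the labeled set predicts labels for the unlabeled pool, and only predictions above a confidence threshold are added back as training data for the next round. The following is a minimal Python sketch, assuming a nearest-centroid classifier and a margin-based confidence score; the function names, threshold, and data are hypothetical.

```python
import math


def centroids(points, labels):
    """Mean feature vector per class (hypothetical nearest-centroid model)."""
    sums, counts = {}, {}
    for p, y in zip(points, labels):
        acc = sums.setdefault(y, [0.0] * len(p))
        for i, v in enumerate(p):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}


def predict_with_confidence(point, cents):
    """Return the nearest centroid's label and a margin-based confidence in [0, 1]."""
    dists = sorted((math.dist(point, c), y) for y, c in cents.items())
    best_d, best_y = dists[0]
    if len(dists) == 1:
        return best_y, 1.0
    second_d = dists[1][0]
    # Confidence grows as the nearest centroid gets closer relative to the runner-up.
    confidence = 1.0 - best_d / second_d if second_d > 0 else 0.0
    return best_y, confidence


def self_train(labeled, labels, unlabeled, threshold=0.5, max_rounds=10):
    """Repeatedly add confident pseudo-labels to the training set."""
    X, y = list(labeled), list(labels)
    pool = list(unlabeled)
    for _ in range(max_rounds):
        cents = centroids(X, y)  # retrain on everything labeled so far
        confident, remaining = [], []
        for p in pool:
            label, conf = predict_with_confidence(p, cents)
            (confident if conf >= threshold else remaining).append((p, label))
        if not confident:
            break  # nothing new clears the threshold; stop early
        for p, label in confident:
            X.append(p)
            y.append(label)
        pool = [p for p, _ in remaining]
    return X, y, pool


labeled = [(0.0, 0.0), (10.0, 10.0)]
labels = ["a", "b"]
unlabeled = [(1.0, 1.0), (9.0, 9.0), (5.0, 5.0), (2.0, 1.0)]
X, y, pool = self_train(labeled, labels, unlabeled, threshold=0.5)
print(len(X), pool)  # 5 [(5.0, 5.0)]
```

The ambiguous midpoint (5, 5) is never pseudo-labeled. That is the purpose of the threshold: a wrong pseudo-label feeds into every later round, so low-confidence items are better routed to a human annotator than guessed.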

3. Crowdsourcing: Leveraging the Crowd for Large-Scale Labeling

Crowdsourcing distributes the labeling task across a large, often geographically dispersed group of people. It is well suited to projects that need large volumes of data labeled quickly.

Benefits:

- Scalability: Can quickly label large datasets with the help of a vast network of annotators.

- Cost-Effective: Generally cheaper than professional annotation, especially for less complex tasks.

Drawbacks:

- Quality Control: Maintaining high quality and consistency can be challenging.

- Privacy Concerns: Sharing sensitive or proprietary data with a large crowd can pose security risks.

Best Practices:

- Hybrid Approaches: Combine crowdsourced labels with expert review to ensure quality.

- Incentive Alignment: Design tasks and rewards to align the interests of the crowd with the desired outcomes.
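
A hybrid approach can be sketched as a small aggregation step. This minimal Python sketch assumes the task mixes in gold-standard questions with known answers, a common quality-control practice that this article does not prescribe. Each worker's accuracy on those questions weights their votes, workers who are unscreened or unreliable are excluded, and items without a clear winning label are routed to expert review. The function names, thresholds, and sample data are hypothetical.

```python
from collections import defaultdict


def worker_accuracy(answers, gold):
    """Accuracy of each worker on gold-standard items.

    answers: list of (worker_id, item_id, label) tuples.
    gold: dict mapping item_id -> known correct label.
    """
    correct, seen = defaultdict(int), defaultdict(int)
    for worker, item, label in answers:
        if item in gold:
            seen[worker] += 1
            correct[worker] += int(label == gold[item])
    return {w: correct[w] / seen[w] for w in seen}


def aggregate(answers, gold, min_accuracy=0.6, min_agreement=0.7):
    """Accuracy-weighted majority vote; returns (accepted, needs_review)."""
    accuracy = worker_accuracy(answers, gold)
    votes = defaultdict(lambda: defaultdict(float))
    for worker, item, label in answers:
        if item in gold:
            continue  # gold items are only used to score workers
        weight = accuracy.get(worker)
        if weight is None or weight < min_accuracy:
            continue  # skip unscreened workers and those who failed too many gold items
        votes[item][label] += weight
    accepted, needs_review = {}, []
    items = {item for _, item, _ in answers if item not in gold}
    for item in sorted(items):
        tally = votes.get(item)
        if not tally:
            needs_review.append(item)  # no trusted votes at all
            continue
        label, top = max(tally.items(), key=lambda kv: kv[1])
        if top / sum(tally.values()) >= min_agreement:
            accepted[item] = label
        else:
            needs_review.append(item)  # low agreement: send to an expert
    return accepted, needs_review


gold = {"g1": "cat", "g2": "dog", "g3": "cat"}
answers = [
    ("w1", "g1", "cat"), ("w1", "g2", "dog"), ("w1", "g3", "cat"),  # 3/3 correct
    ("w2", "g1", "cat"), ("w2", "g2", "dog"), ("w2", "g3", "dog"),  # 2/3 correct
    ("w3", "g1", "dog"), ("w3", "g2", "cat"), ("w3", "g3", "dog"),  # 0/3 correct
    ("w1", "i1", "cat"), ("w2", "i1", "cat"), ("w3", "i1", "dog"),
    ("w1", "i2", "cat"), ("w2", "i2", "dog"), ("w3", "i2", "dog"),
    ("w3", "i3", "dog"),
]
accepted, needs_review = aggregate(answers, gold)
print(accepted)      # {'i1': 'cat'}
print(needs_review)  # ['i2', 'i3']
```

Weighting by gold-question accuracy, rather than counting every vote equally, keeps a few careless workers from outvoting reliable ones, and the agreement threshold decides how much work is escalated to the more expensive expert reviewers.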

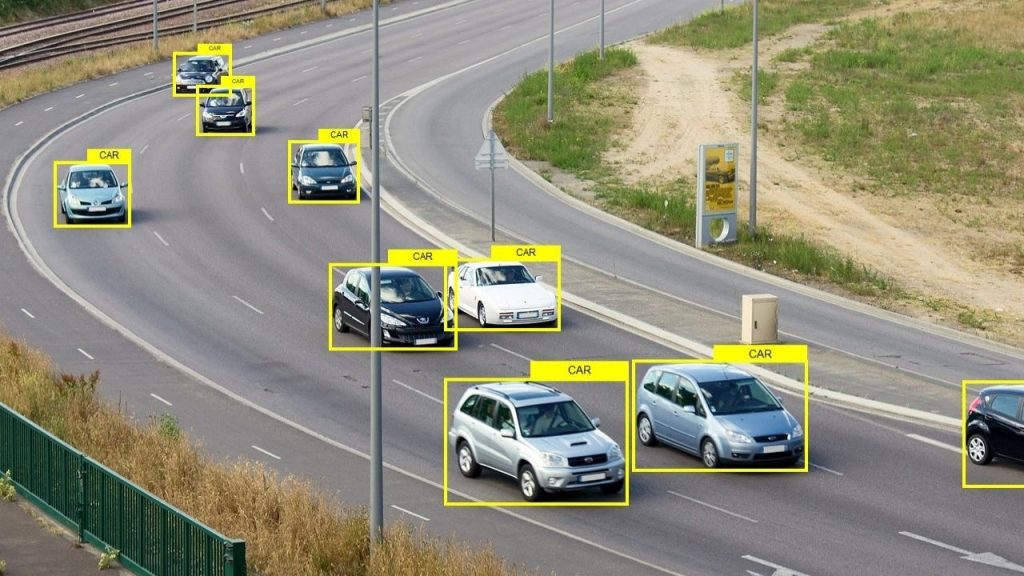

4. Professional Data Labeling Services

Professional data labeling services play a critical role in developing effective AI applications. By delivering high-quality, accurately labeled datasets, they help optimize model performance and make AI solutions more reliable. As AI continues to spread into new sectors, specialized data labeling companies are likely to become increasingly important to the success of AI-driven innovations.

Partnering with a reliable and experienced data labeling service provider like Kotwel can greatly enhance the capabilities of AI systems, ensuring that they deliver the best possible outcomes in real-world applications.

Kotwel is a reliable data service provider, offering custom AI solutions and high-quality AI training data for companies worldwide. Kotwel's data services include data collection, data labeling (data annotation), and data validation, helping you get more out of your algorithms by generating, labeling, and validating unique, high-quality training data tailored to your needs.
