Data labeling is a critical component of machine learning that involves tagging data with one or more labels to identify its features or content. As machine learning applications expand, ensuring high-quality data labeling becomes increasingly important, especially when scaling up operations. Poorly labeled data can lead to inaccurate models and skewed results, making quality assurance essential. This article explores key strategies for maintaining accuracy and consistency in data labeling efforts as they scale.

Ensuring Quality Assurance

1. Automated Validation Checks

Automated tools help maintain labeling accuracy by identifying errors quickly so they can be corrected. Automated validation checks can include:

- Pre-check Logic: Integrate logic checks that automatically verify the plausibility of labels against predefined criteria (for example, that a bounding box lies entirely within its image).

- Real-time Feedback: Provide labelers with instant feedback on their inputs, helping to correct mistakes promptly.
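As an illustration of pre-check logic, the Python sketch below validates a single bounding-box label against two predefined criteria: the class must come from an agreed taxonomy, and the box must lie inside the image with positive width and height. The `BoxLabel` record, its field names, and the `ALLOWED_LABELS` set are hypothetical; a real project would substitute its own schema and rules.

```python
from dataclasses import dataclass

# Hypothetical label record for a bounding-box task; adapt the fields to your schema.
@dataclass
class BoxLabel:
    item_id: str
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

# Assumed label taxonomy for this example.
ALLOWED_LABELS = {"car", "pedestrian", "cyclist"}


def validate(box: BoxLabel, img_width: int, img_height: int) -> list[str]:
    """Return a list of problems found; an empty list means the label passed."""
    errors = []
    if box.label not in ALLOWED_LABELS:
        errors.append(f"unknown label {box.label!r}")
    # The box must start inside the image, end inside it, and have positive size.
    if not (0 <= box.x_min < box.x_max <= img_width):
        errors.append("x coordinates out of bounds or width is not positive")
    if not (0 <= box.y_min < box.y_max <= img_height):
        errors.append("y coordinates out of bounds or height is not positive")
    return errors


good = BoxLabel("img-001", "car", 10, 20, 110, 80)
bad = BoxLabel("img-002", "truck", 50, 20, 40, 700)
print(validate(good, 640, 480))  # []
for problem in validate(bad, 640, 480):
    print(problem)
```

Running a check like this at the moment a labeler submits an item is one way to deliver real-time feedback, since the returned messages can be shown immediately.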

2. Inter-Rater Agreement Assessments

Inter-rater agreement measures the consistency of labels among different annotators and is vital for quality assurance.

- Kappa Statistics: Use chance-corrected statistics such as Cohen's kappa (for two annotators) or Fleiss' kappa (for more than two) to measure agreement levels and identify discrepancies.

- Training and Calibration: Regular training sessions can align labelers’ understanding and approach, enhancing consistency.
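Cohen's kappa compares the observed agreement p_o with the agreement p_e expected by chance from each annotator's label frequencies: kappa = (p_o - p_e) / (1 - p_e). A minimal Python sketch for two annotators who labeled the same items in the same order:

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Chance-corrected agreement between two annotators on the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("expected two non-empty label lists of equal length")
    n = len(rater_a)
    # p_o: fraction of items on which the two annotators chose the same label.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # p_e: agreement expected by chance, from each annotator's label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        # Both annotators used one identical label for every item; kappa is
        # undefined here, and this sketch reports it as perfect agreement.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


a = ["cat", "cat", "dog", "dog", "cat", "dog"]
b = ["cat", "dog", "dog", "dog", "cat", "cat"]
print(round(cohens_kappa(a, b), 3))  # 0.333
```

Here the annotators agree on 4 of 6 items (p_o = 0.667), but with balanced label frequencies chance alone would produce p_e = 0.5, so kappa is only about 0.33. scikit-learn's `sklearn.metrics.cohen_kappa_score` computes the same statistic; the hand-written version is only meant to make the formula concrete. A kappa near 0 means agreement is no better than chance, which is usually a signal to revisit guidelines or hold a calibration session.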

3. Continuous Monitoring of Labeling Performance Metrics

Ongoing evaluation of performance metrics makes it possible to detect and correct any decline in labeling quality over time.

- Quality Control Dashboards: Implement dashboards that track key performance indicators such as speed, accuracy, and agreement metrics.

- Regular Audits: Schedule periodic reviews and audits of labeled data to ensure ongoing compliance with quality standards.
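One way to feed a quality dashboard is to seed the labeling queue with gold-standard items (items whose correct label is already known) and track each annotator's accuracy on them per time window. The sketch below assumes a hypothetical `GoldCheck` record and a weekly window, and flags any annotator-week whose accuracy falls below a threshold (0.9 here, an arbitrary example value).

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class GoldCheck:
    """One hypothetical audit record: an annotator's answer on a gold-standard item."""
    annotator: str
    week: int        # reporting window, e.g. a week number
    correct: bool    # True if the answer matched the known gold label


def weekly_accuracy(checks: list[GoldCheck]) -> dict[tuple[str, int], float]:
    """Map (annotator, week) to the fraction of gold items answered correctly."""
    tally = defaultdict(lambda: [0, 0])  # [correct answers, items seen]
    for check in checks:
        counts = tally[(check.annotator, check.week)]
        counts[0] += int(check.correct)
        counts[1] += 1
    return {key: correct / seen for key, (correct, seen) in tally.items()}


def flag_declines(accuracy: dict[tuple[str, int], float],
                  threshold: float = 0.9) -> list[tuple[str, int]]:
    """Return the (annotator, week) pairs whose accuracy fell below the threshold."""
    return sorted(key for key, value in accuracy.items() if value < threshold)


checks = (
    [GoldCheck("ana", 1, True)] * 10
    + [GoldCheck("ana", 2, True)] * 8 + [GoldCheck("ana", 2, False)] * 2
    + [GoldCheck("ben", 1, True)] * 19 + [GoldCheck("ben", 1, False)]
)
accuracy = weekly_accuracy(checks)
print(flag_declines(accuracy))  # [('ana', 2)]
```

Flagged annotator-weeks are natural candidates for the periodic audits described above.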

Advanced Strategies for Scaling

1. Layered Review Processes

As operations scale, implementing a multi-tier review process can help manage the increased workload and maintain quality.

- Hierarchical Review: In a tiered review system, initial labels are checked by senior annotators to correct errors and refine data.

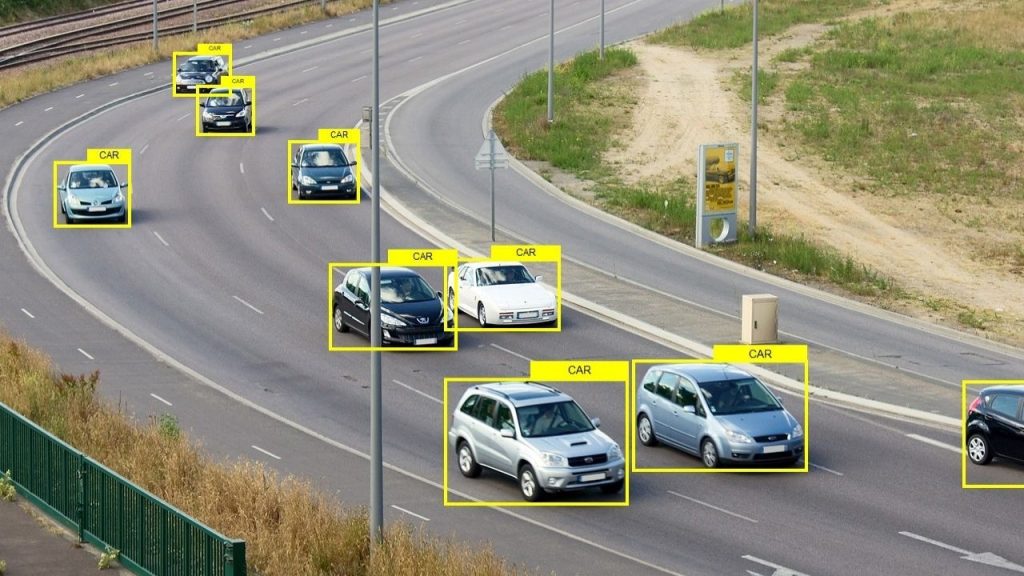
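A tiered review system needs a rule for deciding which first-pass labels reach senior annotators. The sketch below is one hypothetical routing policy: escalate when first-tier annotators disagreed, when the annotator's recent accuracy is below a floor, or when the item falls into a deterministic spot-check sample. The function names, thresholds, and the way accuracy is measured are illustrative assumptions, not a standard.

```python
import hashlib


def in_spot_check(item_id: str, rate: float) -> bool:
    """Deterministically sample roughly `rate` of items by hashing their IDs."""
    digest = hashlib.sha256(item_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000 / 10_000  # in [0, 1)
    return bucket < rate


def route(item_id: str, annotator_accuracy: float, tier1_disagreement: bool,
          spot_check_rate: float = 0.1, accuracy_floor: float = 0.9) -> str:
    """Decide whether a first-pass label is accepted or escalated to a senior annotator."""
    if tier1_disagreement:
        return "senior_review"   # first-tier annotators did not agree
    if annotator_accuracy < accuracy_floor:
        return "senior_review"   # annotator's recent accuracy is below the floor
    if in_spot_check(item_id, spot_check_rate):
        return "senior_review"   # reproducible audit sample
    return "accept"


print(route("img-042", annotator_accuracy=0.97, tier1_disagreement=True))  # senior_review
print(route("img-042", annotator_accuracy=0.97, tier1_disagreement=False,
            spot_check_rate=0.0))  # accept
```

Hashing the item ID, rather than drawing from a random number generator, keeps the spot-check sample reproducible: rerunning the pipeline selects the same items for senior review.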

2. Machine Learning Assistance

Utilize machine learning models to pre-label data, which can:

- Boost Efficiency: Accelerate the labeling process by allowing human annotators to focus on verifying and correcting machine-generated labels.

- Enhance Accuracy: Combine human expertise with algorithmic consistency for improved label quality.
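A pre-labeling step can be sketched as follows, assuming a model that returns a probability for each class. The `score_item` callable and the `toy_model` below are hypothetical stand-ins, not a specific library's API. Suggestions are shown to annotators only when the model's top probability clears a confidence threshold; otherwise the item is labeled from scratch.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Task:
    item_id: str
    suggested_label: Optional[str]  # None means the annotator labels from scratch
    model_confidence: float


def build_tasks(items: Dict[str, str],
                score_item: Callable[[str], Dict[str, float]],
                min_confidence: float = 0.8) -> List[Task]:
    """Attach a model suggestion to each item when the model is confident enough."""
    tasks = []
    for item_id, content in items.items():
        probs = score_item(content)  # hypothetical model: label -> probability
        best_label, best_p = max(probs.items(), key=lambda kv: kv[1])
        # Weak guesses are withheld so annotators are not anchored on them.
        suggestion = best_label if best_p >= min_confidence else None
        tasks.append(Task(item_id, suggestion, best_p))
    return tasks


def toy_model(text: str) -> Dict[str, float]:
    """Stand-in for a trained classifier, for illustration only."""
    return {"spam": 0.95, "ham": 0.05} if "free" in text else {"spam": 0.4, "ham": 0.6}


items = {"m1": "free prize inside", "m2": "meeting moved to noon"}
tasks = build_tasks(items, toy_model)
print([(t.item_id, t.suggested_label) for t in tasks])  # [('m1', 'spam'), ('m2', None)]
```

Where to set `min_confidence` is a judgment call: a higher value sends more items to manual labeling, while a lower value saves time but shows annotators more wrong suggestions to correct.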

3. Crowdsourcing with Control

Crowdsourcing can rapidly scale data labeling efforts, but it requires careful management to maintain quality.

- Controlled Crowdsourcing: Apply rigorous selection criteria to crowd workers, and maintain close oversight with regular feedback.
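A common control mechanism, sketched here under assumed data shapes, is to mix gold-standard questions into crowd tasks, admit only workers who score well on them, and then aggregate the remaining answers by majority vote. The 0.8 accuracy cutoff, the five-item minimum, and the rule that ties go to an expert reviewer are illustrative choices, not fixed requirements.

```python
from collections import Counter, defaultdict


def qualified_workers(gold_answers, gold_truth, min_accuracy=0.8, min_items=5):
    """Keep workers who answered enough gold questions with high enough accuracy.

    gold_answers: list of (worker, item_id, label) tuples on gold-standard items.
    gold_truth:   dict mapping each gold item_id to its known correct label.
    """
    seen, correct = defaultdict(int), defaultdict(int)
    for worker, item_id, label in gold_answers:
        seen[worker] += 1
        correct[worker] += int(label == gold_truth[item_id])
    return {w for w in seen if seen[w] >= min_items and correct[w] / seen[w] >= min_accuracy}


def aggregate(answers, workers):
    """Majority vote per item over qualified workers; ties return None for expert review."""
    votes = defaultdict(Counter)
    for worker, item_id, label in answers:
        if worker in workers:
            votes[item_id][label] += 1
    result = {}
    for item_id, counter in votes.items():
        top = counter.most_common(2)
        tied = len(top) == 2 and top[0][1] == top[1][1]
        result[item_id] = None if tied else top[0][0]
    return result


truth = {"g1": "cat", "g2": "dog", "g3": "cat", "g4": "dog", "g5": "cat"}
gold = ([("w1", g, t) for g, t in truth.items()]
        + [("w3", g, t) for g, t in truth.items()]
        + [("w2", g, "cat") for g in truth])      # w2 always answers "cat"
workers = qualified_workers(gold, truth)            # w1 and w3 qualify; w2 scores 3/5
answers = [("w1", "x1", "cat"), ("w2", "x1", "dog"), ("w3", "x1", "cat"),
           ("w1", "x2", "dog"), ("w2", "x2", "dog"), ("w3", "x2", "cat")]
print(aggregate(answers, workers))  # {'x1': 'cat', 'x2': None}
```

In this example, the unqualified worker `w2` would otherwise have broken the tie on `x2` in favor of `dog`; excluding that worker sends the item to an expert instead.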

In summary, quality assurance in data labeling is pivotal for the development of robust and reliable machine learning models. As you scale your data labeling efforts, integrating automated validation checks, ensuring inter-rater agreement, and continuously monitoring performance metrics are essential strategies. Additionally, exploring advanced methods like layered reviews, machine learning assistance, and controlled crowdsourcing can further enhance the accuracy and consistency of your data labels. By prioritizing quality assurance, organizations can safeguard the integrity of their data inputs and pave the way for successful AI implementations.

High-quality Data Labeling Services at Kotwel

Recognizing the challenges and importance of maintaining high-quality data labeling, Kotwel offers specialized data labeling services tailored to meet the growing demands of AI and machine learning projects. Our expert team, equipped with cutting-edge tools and processes, ensures that your data labeling scales efficiently without compromising on accuracy or consistency, setting the foundation for your AI initiatives' success.

Visit our website to learn more about our services and how we can support your innovative AI projects.

Kotwel is a reliable data service provider, offering custom AI solutions and high-quality AI training data for companies worldwide. Data services at Kotwel include data collection, data labeling (data annotation) and data validation that help get more out of your algorithms by generating, labeling and validating unique and high-quality training data, specifically tailored to your needs.
