What is Video Annotation for object detection?

Video annotation is a computer vision technique where the user identifies objects in videos. The aim of this technique is to automate video annotation by coupling it with a machine-learning algorithm.

How does it work?

It involves two phases: the training phase, where the video dataset is annotated with object labels, and the prediction phase, where a machine-learning algorithm is trained to identify an object by using the previously labeled datasets. The prediction phase involves two sub-steps; i.e., object detection and bounding box estimation.

How does the training phase work?

During the training phase, a user assigns labels to predefined regions of the video, which is later used to label an unseen region by the machine-learning algorithm. The bigger the number of annotations or labeled regions, the more accurate will be the object detection algorithm.

The training phase is a two-step process. In step 1, a user manually annotates videos with predefined regions (e.g., white boxes). In step 2, a supervised learning algorithm is used to learn the concept of object, for example, by detecting shapes, colors and landmark objects.

What is the prediction phase?

In the aforementioned training process, we need to label our videos with 'object' labels in order for the computer-vision software(s) to use them as training sets and to detect shapes/boxes/bounding box information from videos. Therefore, we need a pre-trained classifier or object detector. More specifically, a neural network and/or other machine learning algorithms are used to detect objects and predict the bounding box coordinates of an object.

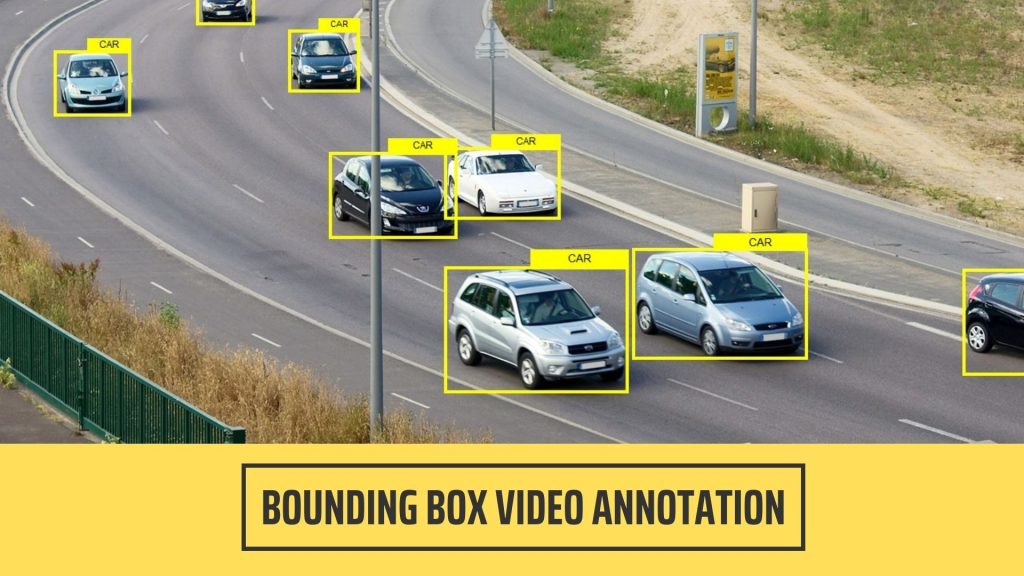

How does the bounding box estimation work?

Once a computer vision algorithm detects an object from video frames, it predicts a bounding box around the detected object. A bounding box is the smallest area that encloses all the points in an object. The only exception is when an object's shape can be inferred by its appearance in different frames.

Where can I get video annotations?

The Open Video Annotation dataset (OVAD) is a public dataset of videos with annotation boxes. It includes 25,000 videos annotated by thousands of users, and has over 2 million annotations and 2 million annotations recorded across five languages; English, Spanish, Portuguese, French and Italian.

The data is organized into a single CSV file, which can be downloaded here. After it's downloaded and extracted from the archive, one can view the structure of it using Python.

What can I use this dataset for?

The goal of an object detection system to localize the object in the image so it can be classified. The localization process is called detection and the result is known as a bounding box around the object. The OVAD dataset currently contains over 2 million annotations from thousands of users. As such, you can use this dataset to train your own object detection system or use it directly as input for another system's training process.

High-quality Video Annotation Service at Kotwel

Video Annotation can be a time-consuming and costly task. However, there are companies that offer video annotation service to customers who wish to have their images annotated quickly with a reasonable price. For example, Kotwel is one such company that can save you hours and costs on video annotation. At Kotwel, we can help you with data annotation tasks for text, image, video datasets. Get in touch with us below to learn more about our solutions and services.

Kotwel is a reliable data service provider in Vietnam, offering custom machine learning solutions, high-quality AI training data for machine learning and AI. It provides data services such as data collection, data annotation and data validation that help get more out of your algorithms by generating, labeling and validating unique and high-quality training data, specifically tailored to your needs.