In machine learning, data quality influences not just a model's accuracy but also its generalization, fairness, interpretability, and scalability. This article looks at each of these effects in turn, with real-world examples showing why data quality is a critical success factor in machine learning applications.

Model Generalization

Model generalization refers to a machine learning model's ability to perform well on new, unseen data. High-quality data is the foundation of robust generalization. A dataset riddled with inaccuracies, inconsistencies, or biases tends to produce a model that performs well on its training data but degrades, sometimes sharply, once it is exposed to real-world inputs. For instance, in healthcare, a model trained to diagnose diseases from medical images may excel in lab settings but falter in clinical use if the training data did not cover a diverse range of patient demographics and imaging technologies.
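One way to make this concrete is to compare accuracy on training data with accuracy on held-out data, and then break held-out accuracy down by subgroup. The following is a minimal sketch, not a definitive evaluation procedure; the function names and the `scanner_a`/`scanner_b` group labels are hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if not y_true:
        raise ValueError("accuracy is undefined for empty input")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def generalization_gap(train_true, train_pred, test_true, test_pred):
    """Training accuracy minus held-out accuracy.

    A large positive gap suggests the model does not generalize well.
    """
    return accuracy(train_true, train_pred) - accuracy(test_true, test_pred)


def accuracy_by_group(y_true, y_pred, groups):
    """Held-out accuracy per subgroup (for example, per imaging device)."""
    counts = defaultdict(lambda: [0, 0])  # group -> [correct, total]
    for t, p, g in zip(y_true, y_pred, groups):
        counts[g][0] += int(t == p)
        counts[g][1] += 1
    return {g: correct / total for g, (correct, total) in counts.items()}


# Hypothetical labels and predictions, for illustration only.
train_true, train_pred = [1, 0, 1, 0], [1, 0, 1, 0]
test_true, test_pred = [1, 0, 1, 0], [1, 0, 0, 1]
test_groups = ["scanner_a", "scanner_a", "scanner_b", "scanner_b"]

print(generalization_gap(train_true, train_pred, test_true, test_pred))
print(accuracy_by_group(test_true, test_pred, test_groups))
```

A per-group breakdown like this can reveal that an acceptable overall score hides a subgroup the training data barely covered.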

Fairness

Fairness in machine learning is about ensuring that models do not propagate or amplify biases present in the data. Data quality significantly impacts fairness: biased or skewed datasets can result in models that discriminate against certain groups. A widely cited example is COMPAS, a risk-assessment tool used by some courts in the United States to estimate recidivism risk. A 2016 ProPublica analysis reported that African-American defendants who did not go on to reoffend were labeled higher risk far more often than comparable white defendants, that is, the tool had a higher false positive rate for that group. The disparity is often attributed to bias in the historical data such tools rely on.
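The false positive rate comparison mentioned above can be computed directly from labels, predictions, and a group attribute. The sketch below assumes binary labels where 1 is the positive (high-risk) class; the function name and the sample values are hypothetical and are not COMPAS data.

```python
from collections import defaultdict


def false_positive_rate_by_group(y_true, y_pred, groups):
    """Per-group false positive rate: false positives / actual negatives.

    Groups with no actual negatives are omitted from the result.
    """
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 0:  # only actual negatives count toward the FPR
            negatives[g] += 1
            if p == 1:
                false_positives[g] += 1
    return {g: false_positives[g] / n for g, n in negatives.items()}


# Hypothetical data, for illustration only.
y_true = [0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [1, 0, 0, 0, 1, 1, 1, 1]
groups = ["a", "a", "a", "b", "b", "b", "a", "b"]

for group, fpr in false_positive_rate_by_group(y_true, y_pred, groups).items():
    print(group, round(fpr, 3))
```

Equal false positive rates are only one of several fairness criteria, so a check like this is a starting point rather than a complete audit.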

Interpretability

Interpretability is the extent to which a human can understand the cause of a decision made by a machine learning model. High-quality data can enhance interpretability by ensuring that models learn from genuine, understandable patterns rather than spurious correlations. For example, a model predicting loan approvals might rely on an irrelevant feature such as the application submission time if that feature happens to correlate with outcomes in the training data, making it harder for humans to understand and trust the model's decisions.
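A simple screening step is to check whether features that should be irrelevant correlate with the label in the training data. The following sketch uses a plain Pearson correlation; the feature names (`submission_hour`, `form_version`), the threshold of 0.3, and the data are all assumptions made for illustration.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def flag_suspect_features(features, labels, suspects, threshold=0.3):
    """Return the suspect features whose |correlation| with the label exceeds the threshold."""
    return [
        name for name in suspects
        if abs(pearson(features[name], labels)) > threshold
    ]


# Hypothetical loan data: 1 = approved, 0 = rejected.
features = {
    "submission_hour": [9, 10, 11, 14, 15, 16],
    "form_version": [1, 2, 3, 1, 2, 3],
}
labels = [1, 1, 1, 0, 0, 0]

print(flag_suspect_features(features, labels, ["submission_hour", "form_version"]))
```

A flagged feature is not proof of a problem, and linear correlation misses non-linear effects, but it indicates where the data deserves a closer look before training.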

Scalability

Scalability in machine learning refers to the ability of a model to maintain or improve performance as the size of the dataset increases. Data quality directly influences scalability: with noisy or incomplete datasets, adding more data points may yield little or no improvement, because the additional examples carry the same poor signal-to-noise ratio. Conversely, high-quality, well-curated datasets enable models to learn more effectively from each additional example, enhancing their scalability.
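Before collecting more data, it can help to measure how incomplete or redundant the existing data is. The sketch below counts rows with missing required fields and rows that duplicate an earlier row; the field names and records are hypothetical, and field values are assumed to be hashable.

```python
def audit_rows(rows, required_fields):
    """Count incomplete rows and duplicates, comparing only the required fields."""
    seen = set()
    incomplete = 0
    duplicates = 0
    for row in rows:
        # A row is incomplete if any required field is absent or None.
        if any(row.get(field) is None for field in required_fields):
            incomplete += 1
        # A row is a duplicate if its required-field values were seen before.
        key = tuple(row.get(field) for field in required_fields)
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return {"rows": len(rows), "incomplete": incomplete, "duplicates": duplicates}


# Hypothetical labeled-image records, for illustration only.
rows = [
    {"image_id": "a1", "label": "cat"},
    {"image_id": "a2", "label": None},
    {"image_id": "a1", "label": "cat"},
    {"image_id": "a3", "label": "dog"},
]

print(audit_rows(rows, ["image_id", "label"]))
```

Tracking these counts as a dataset grows gives an early signal of whether new data is adding information or just volume.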

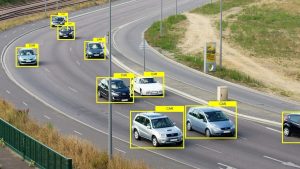

Real-World Example: Image Recognition

A compelling case study in the impact of data quality on machine learning is the development of image recognition technologies. Early image recognition models struggled with tasks that humans find trivial, such as distinguishing between cats and dogs. The breakthrough came not only from algorithmic advances but also, to a significant degree, from better data. Large, well-labeled, and diverse image datasets like ImageNet allowed models to learn from a wide range of examples, leading to dramatic improvements in performance. This example shows how strongly data quality can shape what a model is able to learn.

Data quality is not merely a technical requirement but a strategic asset in machine learning. It influences every aspect of a model's performance and its alignment with ethical standards. By investing in high-quality data through trusted partners like Kotwel, businesses can not only enhance the effectiveness of their AI applications but also ensure they are fair, understandable, and scalable. This commitment to quality helps pave the way for innovative and responsible AI solutions that can truly transform industries.


Kotwel is a reliable data service provider, offering custom AI solutions and high-quality AI training data for companies worldwide. Data services at Kotwel include data collection, data labeling (data annotation) and data validation that help get more out of your algorithms by generating, labeling and validating unique and high-quality training data, specifically tailored to your needs.
