High-quality data labeling is crucial for training effective machine learning models. The accuracy of the labels directly influences the model's performance: "garbage in" leads to "garbage out." This article outlines strategies for ensuring high labeling quality, addresses the challenges of labeling discrepancies, and offers solutions for resolving disagreements among labelers.

1. Importance of High-Quality Labels

The foundation of any machine learning project is its data. For supervised learning, specifically, the labels assigned to training datasets teach the model what patterns to recognize and what outputs to generate. Inaccurate or inconsistent labeling can mislead the model, resulting in poor generalization on unseen data.

2. Strategies for Quality Control

A. Multiple Labelers

Having several labelers annotate the same items can significantly improve label accuracy. This approach allows for:

- Cross-verification: Different labelers can cross-check each other’s work, ensuring that labels are accurate and consistent across the board.

- Error reduction: With more eyes on the data, the likelihood of errors decreases as discrepancies are more likely to be caught and corrected.

B. Consensus Techniques

When labelers disagree, consensus-building techniques can help reconcile differences:

- Majority Voting: This is the simplest form of reaching consensus. The label that the majority of labelers agree on is chosen. Using an odd number of labelers, or defining a tie-break rule in advance, avoids deadlocks.

- Expert Adjudication: In cases where labelers cannot agree, an expert in the dataset’s domain can be called upon to make the final decision.

- Weighted Voting: Votes from labelers with a history of accuracy, for example as measured on items with known correct labels, can be given more weight when labels are combined.
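
The voting schemes above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: the function names, the per-labeler weights, and the choice to return `None` (meaning "send to expert adjudication") when there is no clear winner are all assumptions made for the example.

```python
from collections import Counter, defaultdict


def majority_vote(labels):
    """Return the label chosen by a strict majority, or None if there is none."""
    if not labels:
        return None
    label, count = Counter(labels).most_common(1)[0]
    # Require more than half of the votes so that ties are never resolved silently.
    return label if count > len(labels) / 2 else None


def weighted_vote(votes, weights, default_weight=1.0):
    """Combine votes using per-labeler weights.

    votes:   {labeler_id: label}
    weights: {labeler_id: weight}, e.g. accuracy on known-answer items
             (hypothetical values chosen by the project).
    Returns the label with the highest total weight, or None on a tie.
    """
    if not votes:
        return None
    totals = defaultdict(float)
    for labeler, label in votes.items():
        totals[label] += weights.get(labeler, default_weight)
    best = max(totals.values())
    winners = [label for label, total in totals.items() if total == best]
    return winners[0] if len(winners) == 1 else None


if __name__ == "__main__":
    print(majority_vote(["cat", "cat", "dog"]))
    print(weighted_vote({"a": "cat", "b": "dog", "c": "dog"},
                        {"a": 0.95, "b": 0.4, "c": 0.4}))
```

In the second call the single high-weight labeler outvotes two low-weight ones, which is the intended effect of weighting but also a reason to review the weights periodically.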

C. Use of Annotation Guidelines

To minimize the variability among labelers:

- Clear Guidelines: Detailed annotation guidelines should be provided to all labelers to ensure everyone is on the same page regarding what each label means and when it should be used.

- Regular Training: Regular training sessions can help clarify any doubts about the guidelines and improve the overall consistency of the labeling.

D. Quality Checks

Regular audits and quality checks are essential:

- Random Sampling: Regularly review a random sample of labeled data to check for accuracy.

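- Inter-Annotator Reliability (IAR): Statistically measure the agreement among multiple annotators, for example with Cohen's kappa for two annotators or with Fleiss' kappa or Krippendorff's alpha for more, to identify potential discrepancies or misunderstandings in the labeling instructions. Unlike raw percent agreement, these statistics correct for the agreement expected by chance.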
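
As one concrete way to measure agreement, the sketch below computes Cohen's kappa for two annotators who labeled the same items. It is an illustrative example only; the function name and the sample labels are assumptions, and projects with more than two annotators would typically use a different statistic.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty set of items")
    n = len(labels_a)
    # Observed agreement: fraction of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: computed from each annotator's own label frequencies.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical label throughout; kappa is undefined,
        # and returning 1.0 here is a convention chosen for this sketch.
        return 1.0
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    annotator_1 = ["spam", "spam", "ham", "ham"]
    annotator_2 = ["spam", "ham", "ham", "ham"]
    print(cohen_kappa(annotator_1, annotator_2))  # 0.5
```

Here the annotators agree on 3 of 4 items (0.75), chance agreement is 0.5, and kappa is (0.75 - 0.5) / (1 - 0.5) = 0.5.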

3. Leveraging Technology

A. Automated Quality Control

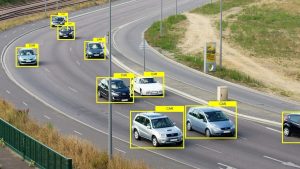

Machine learning tools can pre-process data to flag inconsistencies or outliers for human review, speeding up the quality control process.
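
One simple way to do this, sketched below, is to flag items where a model assigns low probability to the label a human chose. The sketch assumes each item already carries predicted class probabilities from some model (ideally one that did not see that item during training); the field names `id`, `label`, and `probs` and the threshold value are hypothetical.

```python
def flag_for_review(items, threshold=0.2):
    """Return (item_id, probability) pairs for labels the model finds unlikely.

    items: list of dicts with keys
        'id'    - item identifier
        'label' - label assigned by a human
        'probs' - {label: predicted probability} from a model (assumed available)
    Results are sorted so the most suspicious labels come first.
    """
    flagged = []
    for item in items:
        # Probability the model gives to the human-assigned label (0.0 if unseen).
        p_assigned = item["probs"].get(item["label"], 0.0)
        if p_assigned < threshold:
            flagged.append((item["id"], p_assigned))
    return sorted(flagged, key=lambda pair: pair[1])


if __name__ == "__main__":
    batch = [
        {"id": 1, "label": "cat", "probs": {"cat": 0.9, "dog": 0.1}},
        {"id": 2, "label": "dog", "probs": {"cat": 0.95, "dog": 0.05}},
        {"id": 3, "label": "cat", "probs": {"cat": 0.15, "dog": 0.85}},
    ]
    print(flag_for_review(batch))
```

A flag is only a prompt for human review: the model may be the one that is wrong, so flagged labels should not be changed automatically.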

B. Machine-Assisted Labeling

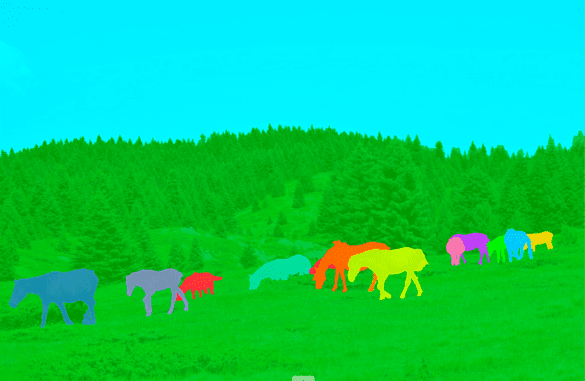

Semi-automated labeling tools can suggest labels based on previously learned data patterns, reducing the cognitive load on human labelers and speeding up the labeling process.
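
To make the idea concrete, here is a toy sketch of label suggestion for short texts: it proposes the label of the most similar previously labeled example, measured by word overlap, and stays silent when nothing is similar enough. Real tools use trained models; the similarity measure, the `min_similarity` cutoff, and all names here are assumptions for illustration.

```python
def tokenize(text):
    """Lowercase a text and split it into a set of whitespace-separated words."""
    return set(text.lower().split())


def jaccard(a, b):
    """Jaccard similarity between two sets (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def suggest_label(text, labeled_examples, min_similarity=0.3):
    """Suggest a label from the most similar already-labeled example.

    labeled_examples: list of (text, label) pairs labeled earlier by humans.
    Returns (label, similarity), or (None, similarity) when the best match is
    below min_similarity, so the labeler starts from scratch instead.
    """
    tokens = tokenize(text)
    best_label, best_score = None, 0.0
    for example_text, label in labeled_examples:
        score = jaccard(tokens, tokenize(example_text))
        if score > best_score:
            best_label, best_score = label, score
    if best_score < min_similarity:
        return None, best_score
    return best_label, best_score


if __name__ == "__main__":
    history = [
        ("refund my order please", "billing"),
        ("app crashes on login", "bug"),
    ]
    print(suggest_label("please refund my order", history))
    print(suggest_label("hello there", history))
```

Withholding low-confidence suggestions matters because labelers tend to accept whatever is pre-filled, so a weak suggestion can bias the label rather than save time.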

4. Continuous Improvement

A. Feedback Loops

Creating a mechanism for labelers to provide feedback on the labeling guidelines and tools helps refine both, and the overall process, over time.

B. Iterative Refinement

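The labeling process should be iterative, allowing labelers to refine labels based on feedback from ongoing model training and validation results. For example, items the model repeatedly misclassifies during validation can be sent back for a second look, since some of them may turn out to be mislabeled.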

5. Challenges and Solutions

A. Resolving Labeling Discrepancies

Discrepancies are inevitable but can be managed through:

- Structured Communication: Regular meetings and discussion forums where labelers can discuss challenging cases and reach a common understanding.

- Escalation Protocols: Clear protocols for escalating unresolved issues to a higher authority or an expert.

B. Scale and Management

As projects scale, managing a large number of labelers and ensuring quality can become challenging. Leveraging project management tools and maintaining a clear hierarchy for decision-making can help manage this complexity effectively.

Overall, ensuring high-quality labeling in machine learning projects is a multifaceted process involving strategic planning, the use of technology, and continuous improvement. By implementing rigorous quality control processes and consensus-building techniques, organizations can greatly enhance the reliability and performance of their machine learning models.

Reliable Data Labeling Services at Kotwel

As we navigate the challenges of data labeling for machine learning, the need for precise and reliable training data becomes ever more critical. Kotwel stands at the forefront of this field, providing high-quality AI training data services that include data annotation, data validation, and data collection. Our tailored solutions meet the specific needs of each client, ensuring their AI projects are equipped with the best data possible.

Visit our website to learn more about our services and how we can support your innovative AI projects.

