Accurate and clear data labeling is foundational for developing robust machine learning models. Whether you're a data scientist, project manager, or part of an annotation team, maintaining consistency and clarity in your labeling efforts ensures that your data not only trains models effectively but is also comprehensible and useful for your team. This article explores key strategies for enhancing the quality and efficiency of your data annotation processes.

1. Establish Clear Labeling Guidelines

Set Detailed Standards

Before beginning any annotation project, develop comprehensive guidelines that are accessible to every team member. These should include:

- Definitions and examples for each label, reducing ambiguity in the interpretation of data.

- Contextual rules on how to handle edge cases or uncertain scenarios.

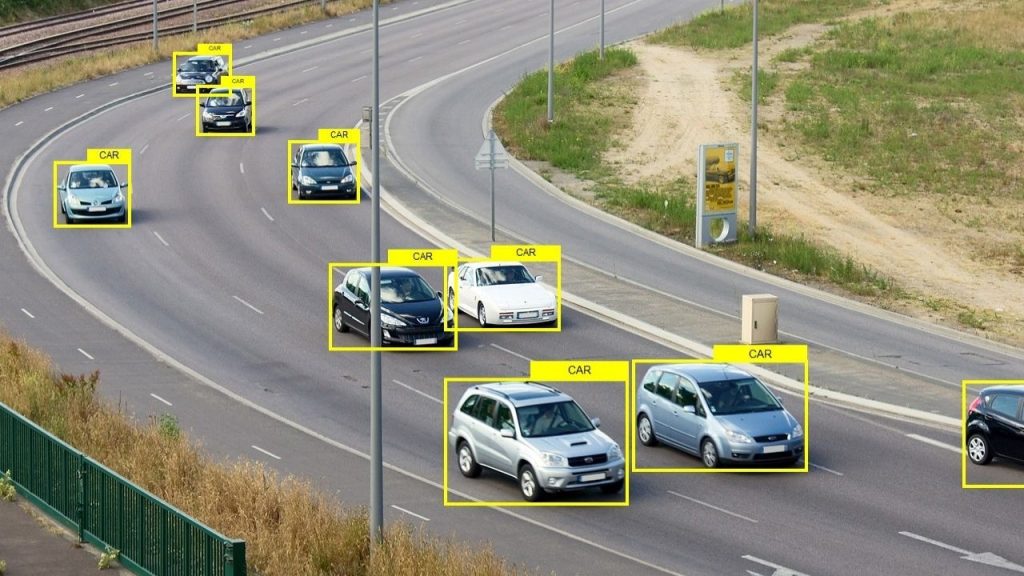

- Visual aids, such as annotated images or diagrams, especially for complex tasks like image segmentation.

Regular Updates & Revisions

Guidelines should not be static; they need to evolve based on real-time feedback and challenges encountered during the labeling process. Ensure that updates are communicated promptly and clearly to all annotators.

2. Use Collaborative & Accessible Tools

Centralized Annotation Platform

Adopt tools that allow multiple annotators to work on the same dataset simultaneously while maintaining version control. Platforms like CVAT, Prodigy, Labelbox, or custom setups in tools like Google Sheets can provide:

- Real-time updates to avoid duplicate work.

- Integrated communication features for quick resolution of doubts or inconsistencies.

- Audit trails to track changes and contributions by individual annotators.

Accessibility & Ease of Use

Choose platforms that are intuitive and require minimal training. This reduces the entry barrier for new team members and decreases the likelihood of errors due to platform complexity.

3. Foster Regular Communication

Regular Check-Ins

Hold regular meetings to discuss progress, address concerns, and share insights that might help refine the labeling process. These can be daily or weekly, depending on the project's intensity and scale.

Feedback Mechanisms

Implement a system where annotators can easily provide feedback on the guidelines or highlight areas of uncertainty. This could be a dedicated channel on communication platforms like Slack or Microsoft Teams.

4. Implement Quality Control Systems

Random Checks

Regularly review a random sample of labeled data to ensure that guidelines are being followed and to identify common errors.

Peer Review

Encourage a culture of peer reviews where annotators cross-check each other's work. This not only helps catch mistakes but also fosters a team-oriented approach to maintaining quality.

Automated Validation Rules

Where possible, integrate automated checks that flag data entries that do not conform to established annotation rules. This can be particularly useful for high-volume text data or structured data forms.

5. Train & Continually Develop Skills

Initial and Ongoing Training

Provide comprehensive training at the start of the project and additional sessions when guidelines are updated or when new types of data are introduced.

Skill Enhancement Workshops

Organize workshops that help annotators understand the nuances of the data and the impact of their work on the machine learning outcomes. This can also include training on new tools and technologies in the data annotation field.

In summary, maintaining clarity in data annotation is not just about meticulous attention to detail—it also involves strategic planning, team collaboration, and ongoing management of the annotation process. By adopting these best practices, teams can ensure high-quality datasets that are pivotal for the success of any machine learning project. Effective data labeling leads to models that are accurate, reliable, and truly reflective of the real-world scenarios they are meant to interpret.

High-quality Data Annotation Services at Kotwel

Ensuring your data is clearly and accurately labeled is crucial for building effective machine learning models. At Kotwel, our data annotation services are designed to support your projects with precision and expertise. Whether you are starting a new project or improving an existing dataset, Kotwel provides the expertise needed to achieve the best results.

Visit our website to learn more about our services and how we can support your innovative AI projects.

Kotwel is a reliable data service provider, offering custom AI solutions and high-quality AI training data for companies worldwide. Data services at Kotwel include data collection, data labeling (data annotation) and data validation that help get more out of your algorithms by generating, labeling and validating unique and high-quality training data, specifically tailored to your needs.

Frequently Asked Questions

You might be interested in:

Audio transcription is a service that transcribes recorded audio and video files into written text. The growth of this field is an outgrowth of the accessibility and increased use of technology. Audio transcription has become easier and more efficient thanks to advances in computer […]

Read MoreContent moderation is different for every company based on their needs, but should still be done if your business operates online. It should include identifying any inappropriate, illegal, or slanderous information posted on your website and providing metrics to help you show that your […]

Read MoreOverview Artificial intelligence, or AI, is the use of computers to simulate human intellectual abilities. Its history dates back to the 1940’s when Alan Turing proposed that intelligent machines could be created through code. Today it is one of the most transforming technologies that […]

Read More