In machine learning (ML) and artificial intelligence (AI), the quality of data labeling directly influences model performance. Clear labeling instructions are crucial for ensuring that human labelers produce consistent, accurate annotations. Here, we explore best practices for crafting labeling instructions that are easy to understand and follow.

1. Clearly Define the Objective

Before beginning any data labeling task, define the purpose of the task and communicate it to the labelers. Understanding the end goal helps labelers appreciate why accuracy and attention to detail matter. Clearly outline what the data will be used for and how the labelers' contributions fit into the larger project.

2. Use Simple and Accessible Language

To ensure that instructions are universally understood, use plain language that avoids technical jargon unless absolutely necessary. If technical terms must be used, include a glossary or definitions for easy reference. This approach helps prevent misunderstandings and ensures that non-expert labelers can contribute effectively.

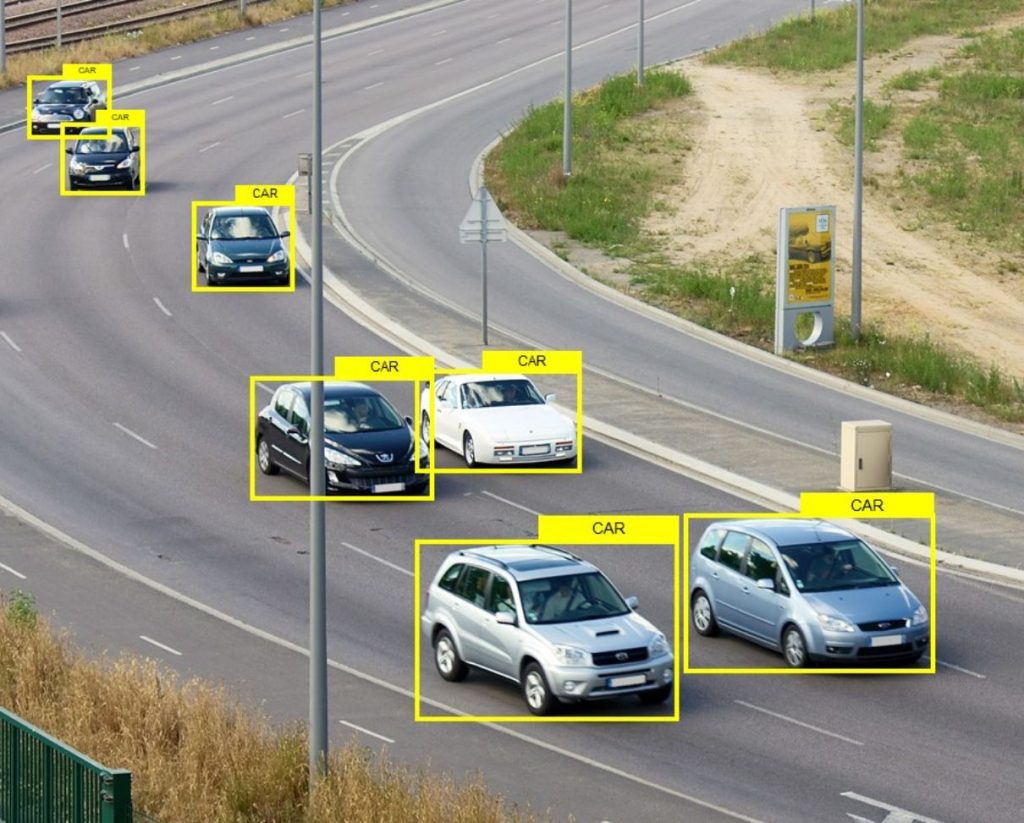

3. Provide Detailed Examples

Examples serve as a practical guide for labelers. Including annotated examples that clearly demonstrate both "do's" and "don'ts" helps clarify expectations. These examples should cover typical scenarios as well as edge cases, thus preparing labelers for the variety of data they might encounter.

4. Highlight Edge Cases

Edge cases often present the most difficulty in labeling tasks. Explicitly describe these scenarios and provide specific instructions on how to handle them. This reduces the risk of inconsistent labeling when faced with ambiguous or complex data.

5. Establish Clear Annotation Guidelines

Develop a detailed guideline document that outlines every step of the annotation process. This document should include:

- The criteria for labeling each type of data

- The tools and software to be used

- Methods for handling uncertainty or ambiguity in data

- Procedures for quality checks and error correction
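One way to keep such a document complete is to treat its four parts as a structured record and check that none is left empty before the guideline goes out. The sketch below is only an illustration: the class name `AnnotationGuideline`, its field names, and the sample entries are assumptions made for this example, not part of any standard or tool.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class AnnotationGuideline:
    """Hypothetical container mirroring the four parts listed above."""
    labeling_criteria: Dict[str, str] = field(default_factory=dict)  # label -> rule
    tools: List[str] = field(default_factory=list)
    ambiguity_rules: List[str] = field(default_factory=list)
    quality_procedures: List[str] = field(default_factory=list)

    def missing_sections(self) -> List[str]:
        """Return the names of sections that are still empty."""
        return [name for name, value in vars(self).items() if not value]


# Illustrative draft with two sections filled in (sample text is made up).
draft = AnnotationGuideline(
    labeling_criteria={"spam": "Unsolicited promotional message"},
    tools=["in-house labeling tool"],
)
print(draft.missing_sections())
```

Running the check on a draft lists the sections that still need writing, which makes gaps visible before labelers ever see the document.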

6. Incorporate Consistency Checks

Consistency is key in data labeling. To achieve it, build in mechanisms such as periodic double-checks, or have multiple labelers annotate the same data points and then discuss any discrepancies. Regular meetings to review guidelines and resolve ambiguities can also help maintain consistency.
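When two labelers annotate the same items, their agreement can be quantified and the disagreements pulled out for discussion. The sketch below uses Cohen's kappa, a common agreement statistic that corrects for chance agreement; it is one possible choice and is not prescribed by this article. The function names and sample labels are illustrative assumptions.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two labelers who annotated the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both labelers must annotate the same non-empty set of items")
    n = len(labels_a)
    # Fraction of items on which the two labelers agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each labeler's label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both labelers used one identical label throughout
    return (observed - expected) / (1 - expected)


def disagreements(item_ids, labels_a, labels_b):
    """List (item_id, label_a, label_b) for every item the labelers disagree on."""
    return [(i, a, b) for i, a, b in zip(item_ids, labels_a, labels_b) if a != b]


# Illustrative data only.
ids = ["img1", "img2", "img3", "img4"]
first = ["cat", "cat", "dog", "dog"]
second = ["cat", "dog", "dog", "dog"]
print(cohens_kappa(first, second))
print(disagreements(ids, first, second))
```

The list of disagreements is a natural agenda for a review meeting, while the kappa value gives a single number to track as the guidelines are revised.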

7. Use Visual Aids

Whenever possible, complement written instructions with visual aids. Diagrams, flowcharts, and videos can help illustrate complex processes more clearly than text alone. This is particularly useful for spatial data like images and videos where precise annotations are critical.

8. Iterate and Update Instructions

Data labeling is often an iterative process. As projects evolve, so too should the instructions. Be open to feedback from labelers about what is working and what is not. Use this feedback to refine the instructions continuously, ensuring they remain relevant and effective.

9. Train and Test Before Starting

Before fully deploying the labeling task, conduct training sessions using the instructions. Testing labelers with a small set of data can help identify any gaps in the instructions and provide a real-world test of how well the labelers understand the task.
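A pilot test of this kind can be scored against a small set of items whose correct labels are already known. The sketch below is a minimal illustration under stated assumptions: the function names, the `PASS_THRESHOLD` value, and the sample labels are all hypothetical, and the right threshold depends on the task.

```python
from collections import Counter

PASS_THRESHOLD = 0.9  # hypothetical cut-off; choose one that suits the task


def score_pilot(submitted, gold):
    """Compare one labeler's answers with reference labels.

    Both arguments map item id -> label. Returns (accuracy, errors), where
    errors is a list of (item_id, expected_label, given_label).
    """
    if not gold:
        raise ValueError("the reference set must not be empty")
    missing = [i for i in gold if i not in submitted]
    if missing:
        raise ValueError(f"labeler skipped items: {missing}")
    errors = [(i, gold[i], submitted[i]) for i in gold if submitted[i] != gold[i]]
    accuracy = 1 - len(errors) / len(gold)
    return accuracy, errors


def common_confusions(all_errors):
    """Count (expected, given) pairs across labelers, most frequent first."""
    return Counter((exp, got) for _, exp, got in all_errors).most_common()


# Illustrative data only.
gold = {"a": "pos", "b": "neg", "c": "pos", "d": "neg"}
answers = {"a": "pos", "b": "pos", "c": "pos", "d": "neg"}
accuracy, errors = score_pilot(answers, gold)
print(accuracy >= PASS_THRESHOLD, common_confusions(errors))
```

If many labelers make the same confusion, the likelier cause is a gap in the instructions rather than individual carelessness, so the confusion counts point to the passages that need rewriting.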

10. Provide Ongoing Support

Finally, ensure that there is a clear process for labelers to ask questions and receive timely answers. Ongoing support not only helps resolve individual issues but also aids in identifying aspects of the instructions that may require further clarification.

By adhering to these best practices, organizations can significantly improve the quality of their data labeling efforts. Clear, concise, and comprehensive instructions are the foundation of accurate annotations, which, in turn, power the development of robust and reliable ML models.

Reliable Data Labeling Services at Kotwel

As we explore the critical role of clear instructions in data labeling, it's important to highlight that not all organizations have the resources or expertise to manage this complex process effectively. This is where Kotwel steps in. We are a leading provider of high-quality AI training data, known for our thorough data annotation, validation, and collection services. Our mission is to support your AI and ML projects by providing tailored solutions that meet your specific needs. Our global team is dedicated to ensuring your projects succeed, and our continued growth is proof of our service quality.

Visit our website to learn more about our services and how we can support your innovative AI projects.
