Ensuring high-quality data labeling is crucial to developing reliable machine learning models. This article outlines quality assurance best practices for data labeling, focusing on error detection, consensus building among labelers, and quality control measures that maintain data integrity.

1. The Role of Quality Assurance in Data Labeling

Data labeling is the process of annotating raw data, such as images, text, or audio, with labels from which machine learning models learn to predict outcomes. Because a model learns whatever patterns its labels contain, including systematic labeling mistakes, label quality directly affects model performance and reliability. Robust quality assurance processes are therefore essential to ensure the accuracy and integrity of labeled data.

2. Error Detection Techniques

Detecting errors early in the data labeling process is crucial to maintaining the quality of the dataset. Here are some effective techniques:

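- Automated Validation Rules: Automated checks that validate labels against predefined rules (for example, every label must come from the approved label set, and every bounding box must lie within the image) catch inconsistencies and formatting errors quickly.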

- Spot Checking: Regularly reviewing a random sample of labeled data helps catch errors that automated rules miss, such as labels that are well-formed but semantically wrong.

- Error Tracking Systems: A system that tracks and categorizes errors makes it possible to analyze patterns and recurring mistakes, so that improvements to guidelines and training can be targeted where they matter most.
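
The three techniques above can be combined in a small script. The Python sketch below is illustrative only: the record format (an `id`, a `label`, a `bbox` given as `(x_min, y_min, x_max, y_max)`, and the image `width` and `height`), the label set, and the error category names are hypothetical assumptions, not a standard schema. It applies the validation rules, tallies failures by category as a minimal form of error tracking, and draws a reproducible random sample for spot checking.

```python
import random
from collections import Counter

# Hypothetical label set and record format; replace with your project's taxonomy.
ALLOWED_LABELS = {"car", "pedestrian", "cyclist"}


def validate(record):
    """Return the error categories found in one labeled record (empty list if valid)."""
    errors = []
    if record["label"] not in ALLOWED_LABELS:
        errors.append("unknown_label")
    x_min, y_min, x_max, y_max = record["bbox"]
    if x_min >= x_max or y_min >= y_max:
        errors.append("degenerate_box")  # zero or negative width/height
    if x_min < 0 or y_min < 0 or x_max > record["width"] or y_max > record["height"]:
        errors.append("box_out_of_bounds")
    return errors


def run_checks(records):
    """Validate every record; return failures by record id and a tally per error category."""
    failures, tally = {}, Counter()
    for record in records:
        errors = validate(record)
        if errors:
            failures[record["id"]] = errors
            tally.update(errors)  # the per-category tally is a minimal error tracker
    return failures, tally


def spot_check_sample(records, k, seed=0):
    """Draw a reproducible random sample of up to k records for manual review."""
    rng = random.Random(seed)
    return rng.sample(records, min(k, len(records)))


records = [
    {"id": 1, "label": "car", "bbox": (10, 10, 50, 40), "width": 640, "height": 480},
    {"id": 2, "label": "truck", "bbox": (0, 0, 700, 100), "width": 640, "height": 480},
    {"id": 3, "label": "cyclist", "bbox": (30, 30, 30, 60), "width": 640, "height": 480},
]
failures, tally = run_checks(records)
print(failures)  # {2: ['unknown_label', 'box_out_of_bounds'], 3: ['degenerate_box']}
print(tally.most_common())
sample = spot_check_sample(records, k=2)
```

Fixing the random seed makes each spot-check batch reproducible, so a second reviewer can audit exactly the same items.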

3. Consensus Building Among Labelers

Discrepancies among labelers can significantly affect data consistency. To build consensus, consider the following strategies:

- Standardized Training: Ensure all labelers undergo comprehensive training that includes clear guidelines on the labeling process, with real-world examples and regular refresher courses.

- Regular Calibration Meetings: Conduct meetings where labelers can discuss challenging cases and align on the best practices for labeling.

- Consensus Thresholds: For items where agreement is essential, collect labels from several labelers and aggregate them, for example by majority voting, and include a data point only once agreement reaches a predefined level (such as two of three labelers agreeing).
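
Majority voting with an agreement threshold can be sketched in a few lines of Python. This is a minimal illustration, assuming each item has a list of labels from independent labelers; the 2/3 default threshold, the tie rule, and the item identifiers are hypothetical choices, not values prescribed by this article. Items that fall short of the threshold are returned separately for further review rather than silently dropped.

```python
from collections import Counter
from fractions import Fraction


def consensus_label(votes, min_agreement=Fraction(2, 3)):
    """Return the majority label if its share of votes reaches min_agreement, else None."""
    if not votes:
        return None
    ranked = Counter(votes).most_common(2)
    label, count = ranked[0]
    if len(ranked) > 1 and ranked[1][1] == count:
        return None  # tie between the top labels: no clear majority
    # Fraction avoids floating-point surprises when comparing shares like 2/3.
    if Fraction(count, len(votes)) >= min_agreement:
        return label
    return None


def split_by_consensus(item_votes, min_agreement=Fraction(2, 3)):
    """Accept items that reach consensus; collect the rest for further review."""
    accepted, needs_review = {}, []
    for item_id, votes in item_votes.items():
        label = consensus_label(votes, min_agreement)
        if label is None:
            needs_review.append(item_id)
        else:
            accepted[item_id] = label
    return accepted, needs_review


item_votes = {
    "img-001": ["cat", "cat", "dog"],   # 2 of 3 agree: accepted
    "img-002": ["cat", "dog", "bird"],  # no majority: sent for review
}
accepted, needs_review = split_by_consensus(item_votes)
print(accepted)      # {'img-001': 'cat'}
print(needs_review)  # ['img-002']
```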

4. Implementing Quality Control Measures

Quality control is an ongoing process that helps maintain the integrity of the data labeling process. Key measures include:

- Double-Blind Labeling: Having two labelers annotate the same data independently and comparing their labels can identify discrepancies and areas where additional guidance is needed.

- Continuous Feedback Loops: Integrating feedback from downstream model performance can help refine labeling guidelines and improve label accuracy.

- Quality Metrics: Develop metrics such as inter-annotator agreement, measured as raw percent agreement or with a chance-corrected statistic such as Cohen's kappa, to assess label quality quantitatively and identify areas for improvement.
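
Double-blind comparison and agreement metrics can be sketched together for the two-labeler case. The Python below is a minimal from-scratch illustration, assuming both labelers labeled the same items in the same order; the item ids and labels are made up for the example. Cohen's kappa corrects raw agreement for the agreement expected by chance given each labeler's label frequencies. If scikit-learn is available, `sklearn.metrics.cohen_kappa_score` computes the same statistic.

```python
from collections import Counter


def percent_agreement(labels_a, labels_b):
    """Fraction of items on which two labelers chose the same label."""
    if not labels_a or len(labels_a) != len(labels_b):
        raise ValueError("label lists must be non-empty and of equal length")
    return sum(a == b for a, b in zip(labels_a, labels_b)) / len(labels_a)


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa: observed agreement corrected for agreement expected by chance."""
    p_o = percent_agreement(labels_a, labels_b)
    n = len(labels_a)
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability both pick the same label if each labels at
    # random according to their own label frequencies.
    p_e = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if p_e == 1:
        return float("nan")  # undefined: both labelers used one identical label throughout
    return (p_o - p_e) / (1 - p_e)


def disagreements(item_ids, labels_a, labels_b):
    """List (item_id, label_a, label_b) for items the two labelers labeled differently."""
    return [(i, a, b) for i, a, b in zip(item_ids, labels_a, labels_b) if a != b]


item_ids = ["i1", "i2", "i3", "i4"]
labeler_1 = ["cat", "cat", "dog", "dog"]
labeler_2 = ["cat", "dog", "dog", "dog"]
print(percent_agreement(labeler_1, labeler_2))        # 0.75
print(cohens_kappa(labeler_1, labeler_2))             # 0.5
print(disagreements(item_ids, labeler_1, labeler_2))  # [('i2', 'cat', 'dog')]
```

Here the labelers agree on 75% of items, but because chance alone would produce 50% agreement with these label frequencies, kappa is a more modest 0.5. The disagreement list is the natural input for calibration meetings or guideline updates.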

5. Leveraging Technology in Quality Assurance

Technology plays a pivotal role in enhancing the efficiency and effectiveness of quality assurance in data labeling:

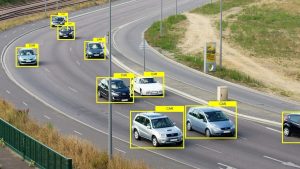

- Machine Learning Assistance: Using machine learning models to pre-label data, with human labelers reviewing and correcting the suggestions, can speed up the labeling process and reduce human error.

- Automated Quality Checks: Software that automatically checks for common labeling errors can substantially reduce the volume of data that needs manual review.

- Real-Time Monitoring Tools: Using tools that provide real-time insights into the labeling process can help managers identify and address issues promptly.
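
Two of these ideas, model-assisted pre-labeling and real-time monitoring, can be illustrated concretely. The Python sketch below assumes a hypothetical pre-labeling model that emits `(item_id, label, confidence)` tuples: it routes high-confidence suggestions to a human for quick confirmation and sends the rest for full labeling. It also keeps a rolling error rate from reviewer verdicts that a dashboard could poll. The function and class names, the confidence threshold, the window size, and the alert limit are all illustrative assumptions, not recommendations.

```python
from collections import deque


def route_prelabels(predictions, threshold=0.9):
    """Split model pre-labels by confidence.

    predictions: (item_id, label, confidence) tuples from a hypothetical
    pre-labeling model. High-confidence suggestions go to a human for quick
    confirmation; the rest are labeled from scratch.
    """
    to_confirm, to_label = [], []
    for item_id, label, confidence in predictions:
        if confidence >= threshold:
            to_confirm.append((item_id, label))
        else:
            to_label.append(item_id)
    return to_confirm, to_label


class RollingErrorMonitor:
    """Track the error rate over the most recent `window` reviewed items."""

    def __init__(self, window=100, max_error_rate=0.05):
        self.results = deque(maxlen=window)  # oldest results drop off automatically
        self.max_error_rate = max_error_rate

    def record(self, is_error):
        self.results.append(bool(is_error))

    @property
    def error_rate(self):
        return sum(self.results) / len(self.results) if self.results else 0.0

    def should_alert(self):
        return self.error_rate > self.max_error_rate


to_confirm, to_label = route_prelabels(
    [("a", "car", 0.97), ("b", "cyclist", 0.41), ("c", "pedestrian", 0.90)]
)
print(to_confirm)  # [('a', 'car'), ('c', 'pedestrian')]
print(to_label)    # ['b']

monitor = RollingErrorMonitor(window=4, max_error_rate=0.25)
for outcome in [False, True, False, False, True]:
    monitor.record(outcome)
print(monitor.error_rate, monitor.should_alert())  # 0.5 True
```

A fixed-size `deque` keeps memory constant and makes the rate reflect only recent work, so a sudden drop in quality is not masked by a long history of good labels.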

Quality assurance in data labeling is not just about catching errors; it is about building a systematic process that improves the overall reliability and consistency of labeled data. By combining error detection techniques, consensus-building strategies, and quality control measures, organizations can produce the high-quality data needed to train robust machine learning models. These practices improve model accuracy and also make the labeling process more efficient and scalable.

Reliable Data Labeling Services at Kotwel

To further enhance data labeling quality, it's essential to partner with a trusted provider like Kotwel. Our dedication to quality and accuracy establishes us as a reliable partner for AI projects of any size, ensuring your data-driven solutions are built on accurate and consistent foundations.

Visit our website to learn more about our services and how we can support your innovative AI projects.

Kotwel is a reliable data service provider, offering custom AI solutions and high-quality AI training data for companies worldwide. Data services at Kotwel include data collection, data labeling (data annotation) and data validation that help get more out of your algorithms by generating, labeling and validating unique and high-quality training data, specifically tailored to your needs.
