Data annotation, a crucial step in machine learning and artificial intelligence development, often relies on a human-in-the-loop approach. This approach integrates human judgment and expertise into the data labeling process, improving the quality and reliability of annotated datasets.

What is Human-in-the-Loop Annotation?

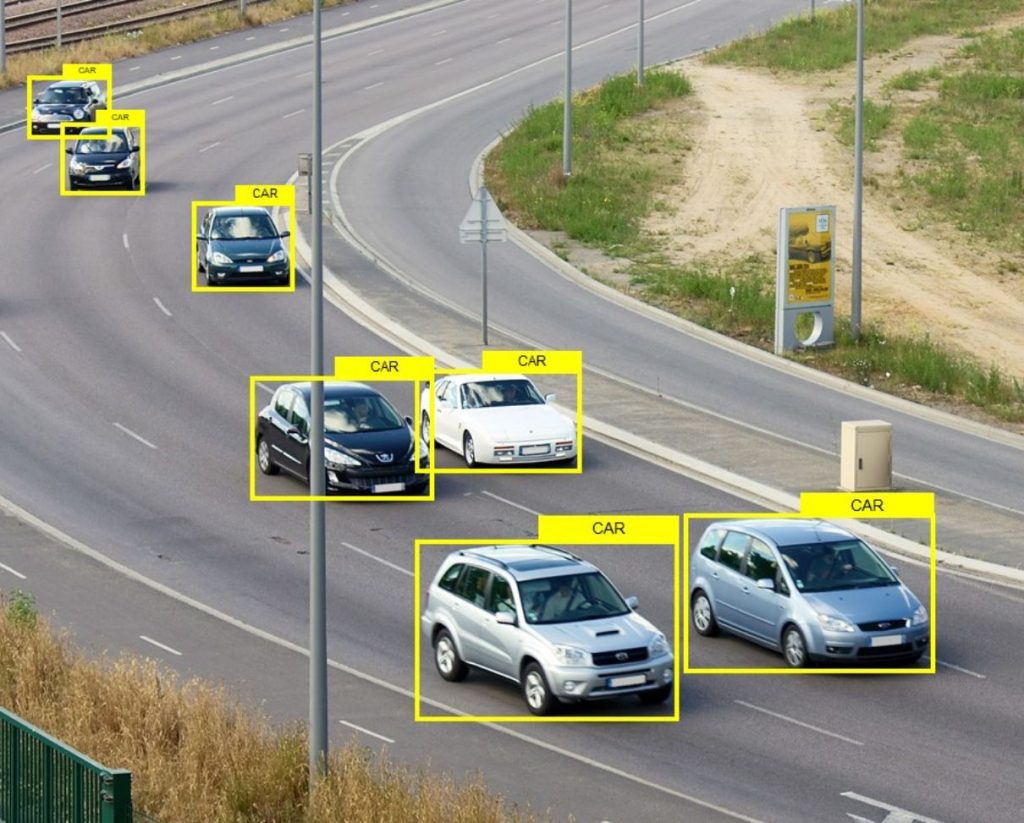

Human-in-the-loop annotation involves the active participation of human annotators in labeling datasets. These annotators, often trained professionals or crowd workers, interpret and classify data according to predefined criteria. By leveraging human intelligence, this approach addresses complex tasks that are challenging for automated systems alone.

Advantages of Human-in-the-Loop Annotation

- Complex Data Interpretation: Human annotators excel in understanding nuanced contexts and intricacies within datasets, enabling accurate labeling of diverse data types such as images, text, and audio.

- Adaptability to Ambiguity: When data is ambiguous, human annotators can make informed decisions based on contextual understanding and domain knowledge, reducing labeling errors.

- Continuous Learning and Improvement: Human-in-the-loop annotation facilitates iterative learning cycles, allowing annotators to refine labeling strategies based on feedback and evolving project requirements.

Challenges and Considerations

Despite its advantages, human-in-the-loop annotation presents several challenges:

- Subjectivity: Human annotators may bring subjective biases or interpretations that lead to inconsistent labels, so rigorous quality assurance measures are necessary.

- Scalability: Manual annotation processes can be time-consuming and resource-intensive, limiting scalability for large datasets or time-sensitive projects.

- Cost: Hiring and training human annotators incur financial costs, especially for specialized or domain-specific tasks.
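One common quality assurance check for subjectivity is to have two annotators label the same items independently and measure how often they agree beyond what chance would produce. The sketch below computes Cohen's kappa in plain Python. It is an illustrative assumption, not a description of any particular vendor's workflow: kappa is only one of several agreement metrics a project might use, and the labels and data are made up.

```python
from collections import Counter


def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Cohen's kappa for two annotators who labeled the same items (illustrative sketch)."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of items")
    n = len(labels_a)
    # Observed agreement: fraction of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, estimated from each annotator's own label frequencies.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical label everywhere; kappa is undefined,
        # so by convention treat it as perfect agreement.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Hypothetical labels from two annotators on the same six images.
annotator_a = ["cat", "cat", "dog", "dog", "cat", "dog"]
annotator_b = ["cat", "dog", "dog", "dog", "cat", "dog"]
print(round(cohens_kappa(annotator_a, annotator_b), 3))  # 0.667
```

Here the annotators agree on 5 of 6 items, but because roughly half that agreement would be expected by chance, kappa is about 0.67. Items on which annotators disagree are natural candidates for adjudication or clearer labeling guidelines.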

Integration with Automated Techniques

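To address scalability and efficiency challenges, human-in-the-loop annotation is often combined with automated techniques such as active learning and semi-supervised learning. In active learning, a model selects the samples it is most uncertain about, or that are otherwise most challenging, and routes them to human reviewers. Semi-supervised learning complements this by training on a small labeled set together with a larger pool of unlabeled data. Both techniques reduce the number of labels humans must provide, so annotation effort goes where it matters most.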
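The selection step of active learning can be sketched as uncertainty sampling: score each unlabeled sample by the entropy of the model's predicted class probabilities and send the highest-scoring ones to annotators. This minimal sketch assumes the model already outputs a probability distribution per sample; the sample IDs, probabilities, and the `select_for_review` helper are hypothetical, and entropy is only one of several uncertainty measures (least confidence and margin sampling are common alternatives).

```python
import math


def entropy(probs: list[float]) -> float:
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_review(predictions: dict[str, list[float]], budget: int) -> list[str]:
    """Return the IDs of the `budget` most uncertain samples, most uncertain first."""
    ranked = sorted(predictions, key=lambda sid: entropy(predictions[sid]), reverse=True)
    return ranked[:budget]


# Hypothetical model outputs for four unlabeled images over three classes.
predictions = {
    "img_001": [0.98, 0.01, 0.01],  # confident: little value in human review
    "img_002": [0.40, 0.35, 0.25],
    "img_003": [0.55, 0.44, 0.01],
    "img_004": [0.34, 0.33, 0.33],  # nearly uniform: most uncertain
}
print(select_for_review(predictions, budget=2))  # ['img_004', 'img_002']
```

In a full loop, the selected samples would be labeled by humans, added to the training set, and the model retrained before the next round of selection.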

Bridging Human Expertise and Cutting-Edge Technology

Outsourcing to a reputable service provider, such as Kotwel, is an effective way to address the challenges associated with human-in-the-loop annotation. By leveraging the expertise and infrastructure of a trusted partner, organizations can overcome issues related to scalability, cost, and quality assurance. Kotwel, with its global presence and commitment to delivering high-quality data annotation services, is a reliable choice for outsourcing data annotation tasks, ensuring efficient, accurate, and cost-effective solutions for diverse projects.

Visit our website to learn more about our services and how we can support your innovative AI projects.

Kotwel is a reliable data service provider, offering custom AI solutions and high-quality AI training data for companies worldwide. Data services at Kotwel include data collection, data labeling (data annotation) and data validation that help get more out of your algorithms by generating, labeling and validating unique and high-quality training data, specifically tailored to your needs.